Intentional Spaces — Physical AI as an interaction design material.

In short

Intentional Spaces digs into what physical AI as a material means for the world of interaction design. It aims to explore the possibilities and opportunities that arise when a space understands, reasons about, and interacts with the people and things inside it, and how our experiences with our environment and our relationship with technology change through physical AI.

As AI understands more of the physical world, it enables us to make sense of our surroundings in new and easier ways.

Partner

Intentional Spaces is a collaboration with Archetype AI, a San Francisco-based startup born out of Google's ATAP department (Project Soli). Archetype AI leads the way in developing a Large Behavior Model, which makes sense of the physical world around us by fusing sensor data with natural language. Check the video below for more info!

Explorations

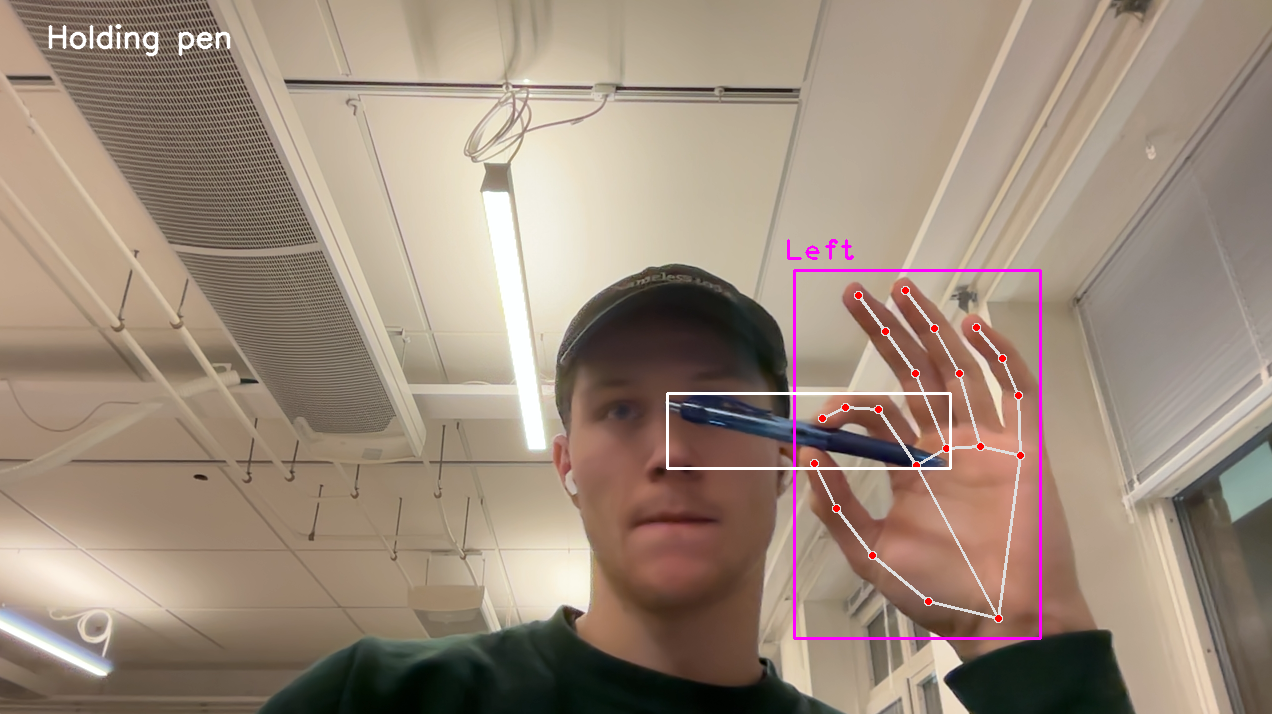

Zero-Shot Object Detection

Detecting a hand overlapping with objects found through input prompts such as "a photo of a pen".

We should not look at models in silos, but rather at gluing the right ones together, leveraging their unique qualities.
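As a rough illustration of gluing two models together, the overlap check could look something like the sketch below. It assumes one model returns a bounding box for the hand and a zero-shot detector returns a box for the prompted object; the `Box` type, the function names and the 0.2 threshold are all hypothetical and not taken from the project.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned bounding box in pixel coordinates (hypothetical type)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def area(box: Box) -> float:
    """Area of a box, never negative."""
    return max(0.0, box.x_max - box.x_min) * max(0.0, box.y_max - box.y_min)


def intersection_area(a: Box, b: Box) -> float:
    """Area shared by two boxes, or 0.0 if they do not overlap."""
    width = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    height = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    return max(0.0, width) * max(0.0, height)


def overlap_ratio(hand: Box, obj: Box) -> float:
    """Fraction of the object's box that is covered by the hand's box."""
    obj_area = area(obj)
    if obj_area == 0.0:
        return 0.0
    return intersection_area(hand, obj) / obj_area


def is_overlapping(hand: Box, obj: Box, threshold: float = 0.2) -> bool:
    """True when the hand covers at least `threshold` of the object (assumed value)."""
    return overlap_ratio(hand, obj) >= threshold


# Example boxes standing in for the outputs of two separate models.
hand_box = Box(0, 0, 10, 10)
pen_box = Box(5, 5, 15, 15)
print(is_overlapping(hand_box, pen_box))
```

Keeping the overlap logic separate from both models means either one can be swapped out without touching the other.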

Accelerometer Analysis

LLM making sense of raw sensor data.

The more context an LLM has about the data, the more accurately it can reason about it.
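One way to picture this is a prompt that wraps the raw samples in context before they reach the model. The sketch below is an assumption about how such a prompt might be assembled, not the project's actual pipeline: the function names, the prompt wording and the choice of summary statistics are hypothetical, and no LLM is called.

```python
import math
from statistics import mean
from typing import Dict, List, Tuple

Sample = Tuple[float, float, float]  # (x, y, z) acceleration, assumed to be in g


def summarise(samples: List[Sample]) -> Dict[str, float]:
    """Compute simple statistics over the acceleration magnitudes."""
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    return {
        "count": len(magnitudes),
        "mean_g": round(mean(magnitudes), 2),
        "peak_g": round(max(magnitudes), 2),
    }


def build_prompt(samples: List[Sample], context: str, question: str) -> str:
    """Combine context, summary statistics and raw samples into one prompt."""
    stats = summarise(samples)
    lines = [
        f"Context: {context}",
        f"Sensor: 3-axis accelerometer, {stats['count']} samples, units in g.",
        f"Mean magnitude: {stats['mean_g']} g. Peak magnitude: {stats['peak_g']} g.",
        "Raw samples (x, y, z):",
    ]
    lines += [f"{x:.2f}, {y:.2f}, {z:.2f}" for x, y, z in samples]
    lines.append(f"Question: {question}")
    return "\n".join(lines)


# Made-up readings: two samples at rest, then one spike.
readings = [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (3.0, 4.0, 0.0)]
print(build_prompt(readings, "Sensor taped to a parcel in transit.", "Was the parcel dropped?"))
```

The same samples sent without the context and sensor lines would leave the model guessing at the units and at what the numbers describe.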

Haptic Glove

Feedback through haptics: a quick double pulse for incorrect, a slow single pulse for correct.

Translating one sense into another, vision into haptics.
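The two feedback patterns could be encoded as simple timing lists, as in the minimal sketch below. The millisecond durations are invented for illustration, and `set_motor` is a hypothetical callback standing in for whatever actually drives the glove's vibration motor.

```python
import time
from typing import Callable, Dict, List, Tuple

Pulse = Tuple[int, int]  # (vibrate_ms, pause_ms)

# Durations are assumptions; the source only says "quick double" and "slow single".
PATTERNS: Dict[str, List[Pulse]] = {
    "incorrect": [(80, 60), (80, 0)],  # quick double pulse
    "correct": [(400, 0)],             # slow single pulse
}


def pattern_for(is_correct: bool) -> List[Pulse]:
    """Pick the pulse pattern that matches the vision result."""
    return PATTERNS["correct" if is_correct else "incorrect"]


def play(
    pattern: List[Pulse],
    set_motor: Callable[[bool], None],
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Switch the motor on and off following the pattern's timings."""
    for vibrate_ms, pause_ms in pattern:
        set_motor(True)
        sleep(vibrate_ms / 1000)
        set_motor(False)
        sleep(pause_ms / 1000)


# Stand-in motor that just prints its state.
play(pattern_for(False), lambda on: print("motor on" if on else "motor off"))
```

Passing `sleep` in as a parameter keeps the pattern logic testable without waiting for real time to pass.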

Stethoscope

Understand the patient together with AI. Stay in the moment with the other person, while listening to the heartbeat and getting insights from the AI.

Empowering humans to stay at the centre, instead of replacing them.

Binoculars

AI-powered depth camera. Switch between color and depth view, and get insights from the AI about the main object in the viewport.

AI makes sense of the world in a different way.

Sketch Assistant

What if you could sketch together with AI in the physical world? Using traditional tools with an AI layer projected on top.

AI can inspire us, if we give it space to do so.

Dynamic Actuators

An LLM deciding what interaction is suitable for the context and the prompt. It can communicate to the person through the desk lamp, the fan, the waving arm and/or the speaker.

AI can reason about suitable interactions, given enough context. The relation becomes dynamic: the AI can move from background to foreground, from agent to instrument.
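If the LLM is asked to answer with a JSON list of actuators, its reply still needs to be checked before anything moves. The sketch below shows one possible way to do that. The reply format, the actuator names and the fallback to the speaker are all assumptions made for illustration.

```python
import json
from typing import List, Sequence

# Names mirror the actuators listed above; the identifiers are hypothetical.
ACTUATORS = {"desk_lamp", "fan", "waving_arm", "speaker"}


def parse_choice(llm_reply: str, fallback: Sequence[str] = ("speaker",)) -> List[str]:
    """Return the valid actuator names from a reply like {"actuators": ["fan"]}."""
    try:
        data = json.loads(llm_reply)
    except json.JSONDecodeError:
        return list(fallback)  # reply was not JSON at all
    if not isinstance(data, dict):
        return list(fallback)
    chosen = data.get("actuators", [])
    if not isinstance(chosen, list):
        return list(fallback)
    # Drop anything the space does not actually have.
    valid = [name for name in chosen if isinstance(name, str) and name in ACTUATORS]
    return valid or list(fallback)


print(parse_choice('{"actuators": ["desk_lamp", "toaster"]}'))
```

Filtering against a fixed set keeps the model free to choose while making sure it can only address actuators that exist in the space.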

AI as a 6th sense

What if the space understood your intention, and could let you feel the relevant information about an object when you interact with it, like a 6th sense?

In this example, a person wants to know if the parcel might be damaged. The AI will pick the most relevant history of the sensor data, and show it through haptics to give the person an understanding of how the parcel was handled, and whether it might be damaged or not.

Without changing an object, a whole new set of personalised interactions comes into existence for making sense of it in its context.

Physical context as prompt

The doctor examines the patient's knee, the AI comprehends this intricate context and generates insights on demand based on all available data – for example medical records, history and scans.

The doctor can express their need for assistance by looking at the screen, indicating they want the AI to share its insights through that actuator. Subtle facial expressions can be used to get new suggestions, or enlarge them.

When AI can sense our physical context, it can reason about our intention in that moment – with those people, interacting with those objects – and support accordingly.

Recap

The series of interventions presents a glimpse into the landscape of interactions enabled by physical AI, and of what it could be like to co-exist with an autonomous, social, reactive and pro-active entity that interacts with us in the physical world.

Consider that all things have character, even if they don't. Don't underestimate human complexity, emotions, moods, cultures and ethics when working with AI.

Still curious to learn more? Check out the full report or listen to the podcast below, generated by NotebookLM.